New SDXL 1.0 release allows hi-res AI image synthesis that can run on a local machine.

See full article...

See full article...

It would be nice to have some approximate numbers here.SDXL also offers drawbacks when running locally on consumer hardware, such as higher memory requirements and slower generation times

I suspect it depends to some extent on each user's TPL (total patience level).It would be nice to have some approximate numbers here.

Every major advancement in media also seems to be an advancement in depravity in general, and I'm not sure how I'm going to feel about it in the next few years. To paraphrase: I just don't feel like I have any choice but to ride this wave while the scientists are busy figuring out how to make it work instead of asking themselves if they should.And here we thought that social media had plumbed the depths of human depravity. Gentle reader, you ain't seen nothin' yet.

There's a really good summary of recent updates to Stable Diffusion here. Very much worth a read.Up to now, I have found that Midjourney is about 3 months ahead of Stable Diffusion in terms of prompt coherence, image detail, and image quality. But I haven't compared them for a little while - has anything changed?

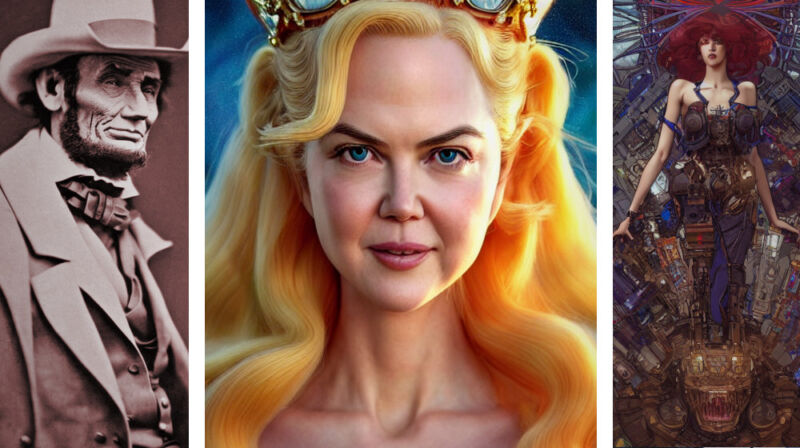

Nah, its just the author's preference. Tons of examples, Kidman is nowhere to be seen.The first test of any new image-creating AI program seems to be to have it create an image of Nicole Kidman (or a vague resemblance thereof).

According to the release announcement, "This two-stage architecture allows for robustness in image generation without compromising on speed or requiring excess compute resources. SDXL 1.0 should work effectively on consumer GPUs with 8GB VRAM or readily available cloud instances."It would be nice to have some approximate numbers here.

In the past I know Mr. Edwards has used the same prompt (maybe even seeds) for different generations of the software. I wouldn't be surprised if this is the case for the familiarity of certain images in the article series.Nah, its just the author's preference. Tons of examples, Kidman is nowhere to be seen.

You might look into the ControlNet stuff. I haven't played with it myself, but supposedly it's good for distinguishing/manipulating aspects like subject vs. background, poses, depth (in 3D), etc.The blending of two inputs seems to be extremely useful. Often times one wants to have a subject and a scene, for example and it's difficult (in my very limited experience) to merge those well. The county fair is coming up, perhaps I should revisit the Stability installation I played with a year ago and see what can be cooked up in the digital art realm.

Clearly a natural born Ars forum member. Extra fingers for faster typing."A man that holds a sign saying Ars Technica".... and 6 or 7 fingers on each hand.

I followed the unstable diffusion NSFW discord a while ago and occasionally peek in to see what they are up to. Some interesting stuff for sure. This box has been opened and will never be closed again.And here we thought that social media had plumbed the depths of human depravity. Gentle reader, you ain't seen nothin' yet.

The Internet is for porn, and so is AI. I doubt OP was concerned about NSFW, it seems to be about "stolen" pictures.I followed the unstable diffusion NSFW discord a while ago and occasionally peek in to see what they are up to. Some interesting stuff for sure. This box has been opened and will never be closed again.

It's true, I was attempting to replicate the now-famous "Stable Diffusion lady" from my original Stable Diffusion article last year, but with SDXL.In the past I know Mr. Edwards has used the same prompt (maybe even seeds) for different generations of the software. I wouldn't be surprised if this is the case for the familiarity of certain images in the article series.

I suppose this could be a younger version. She has the same evil eyebrows.It's true, I was attempting to replicate the now-famous "Stable Diffusion lady" from my original Stable Diffusion article last year, but with SDXL.

I suppose this could be a younger version. She has the same evil eyebrows.

Still, "techinica?" It even takes liberties with specific text prompts?

I can't wait for the images of a certain would be president being french kissed by another inmate with spider web tattoos to start showing up. Is that the kind of depravity you meant?Every major advancement in media also seems to be an advancement in depravity in general, and I'm not sure how I'm going to feel about it in the next few years. To paraphrase: I just don't feel like I have any choice but to ride this wave while the scientists are busy figuring out how to make it work instead of asking themselves if they should.

Why is it so easy to tell that an image is AI generated?

No, I meant depravity I wouldn't enjoy seeing.I can't wait for the images of a certain would be president being french kissed by another inmate with spider web tattoos to start showing up. Is that the kind of depravity you meant?

I wish I had the money for this. Main girl on the image looks like an eviler version of late 90s Nicole Kidman.

You need both decent GPU/CPU speeds and lots of vRAM as well as regular RAM.It would be nice to have some approximate numbers here.

Because the image generation doesn't understand text. It knows the general patterns of text, but it doesn't understand what individual letters are or what words are, and such.How could a text engine that was explicitly told to show a sign with "Ars Technica" actually get the spelling wrong??

All I have these days is an S22 but honestly makes me want to make it a medium term project.It requires a decent rig to run fast, but it's free and will probably run on anything if you have the patience.

It requires a decent rig to run fast, but it's free and will probably run on anything if you have the patience.

In the past I know Mr. Edwards has used the same prompt (maybe even seeds) for different generations of the software. I wouldn't be surprised if this is the case for the familiarity of certain images in the article series.

Because we've barely scratched the surface of what models like these are really capable of. In the blink of an eye, we've gone from barely coherent collections of almost human elements to fantastically detailed and coherent images of virtually anything you can type out. This tech is moving extremely fast, so it won't be long before wonky hands are no longer a tell.Why is it so easy to tell that an image is AI generated?

I just got a local instance running. Taking requests.