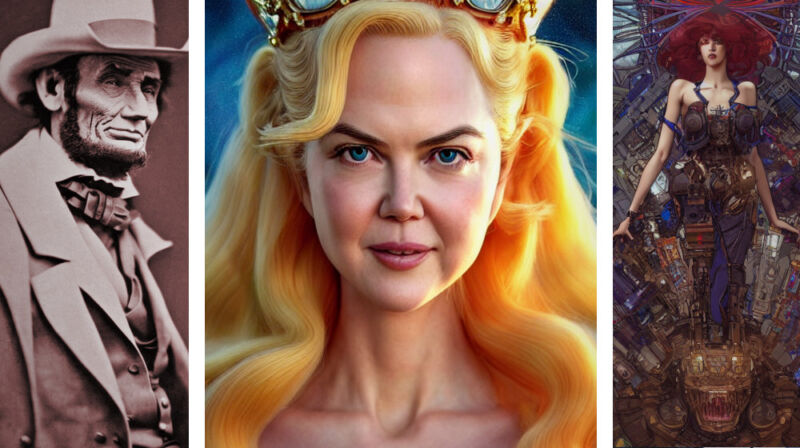

AI image generation is here in a big way. A newly released open source image synthesis model called Stable Diffusion allows anyone with a PC and a decent GPU to conjure up almost any visual reality they can imagine. It can imitate virtually any visual style, and if you feed it a descriptive phrase, the results appear on your screen like magic.

Some artists are delighted by the prospect, others aren't happy about it, and society at large still seems mostly unaware of the rapidly evolving tech revolution taking place through communities on Twitter, Discord, and GitHub. Image synthesis arguably brings implications as big as the invention of the camera, or perhaps the creation of visual art itself. Even our sense of history might be at stake, depending on how things shake out. Either way, Stable Diffusion is leading a new wave of deep learning creative tools that are poised to revolutionize the creation of visual media.

The rise of deep learning image synthesis

The "Stable Diffusion" branding is the brainchild of Emad Mostaque, a London-based former hedge fund manager whose aim is to bring novel applications of deep learning to the masses through his company, Stability AI. The technology was developed by researchers at the CompVis group at Ludwig Maximilian University of Munich. But the roots of modern image synthesis date back to 2014, and Stable Diffusion wasn't the first image synthesis model (ISM) to make waves this year.

In April 2022, OpenAI announced DALL-E 2, which shocked social media with its ability to transform a scene written in words (called a “prompt”) into myriad visual styles that can be fantastic, photorealistic, or even mundane. People with privileged access to the closed-off tool generated astronauts on horseback, teddy bears buying bread in ancient Egypt, novel sculptures in the style of famous artists, and much more.

Not long after DALL-E 2, Google and Meta announced their own text-to-image AI models. Midjourney, available as a Discord server since March 2022 and open to the public a few months later, charges for access and achieves similar effects but with a more painterly and illustrative quality as the default.

Then there's Stable Diffusion. On August 22, 2022, Stability AI released its open source image generation model, which arguably matches DALL-E 2 in quality. It also launched its own commercial website, called DreamStudio, that sells access to compute time for generating images with Stable Diffusion. Unlike DALL-E 2, anyone can use it, and since the Stable Diffusion code is open source, projects can build off it with few restrictions.

In the past week alone, dozens of projects that take Stable Diffusion in radical new directions have sprung up. And people have achieved unexpected results using a technique called "img2img" that has "upgraded" MS-DOS game art, converted Minecraft graphics into realistic ones, transformed a scene from Aladdin into 3D, translated childlike scribbles into rich illustrations, and much more. Image synthesis may bring the capacity to richly visualize ideas to a mass audience, lowering barriers to entry while also accelerating the capabilities of artists who embrace the technology, much like Adobe Photoshop did in the 1990s.

You can run Stable Diffusion locally yourself if you follow a series of somewhat arcane steps. For the past two weeks, we've been running it on a Windows PC with an Nvidia RTX 3060 12GB GPU. It can generate 512×512 images in about 10 seconds. On a 3090 Ti, that time goes down to four seconds per image. The interfaces keep evolving rapidly, too, going from crude command-line interfaces and Google Colab notebooks to more polished (but still complex) front-end GUIs, with much more polished interfaces coming soon. So if you're not technically inclined, hold tight: Easier solutions are on the way. And if all else fails, you can try a demo online.
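To put those per-image times in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes images are generated back to back at the quoted speeds, with no allowance for model loading, prompt changes, or saving files, so real-world throughput would likely be lower. The function names are ours, chosen for illustration only.

```python
# Per-image generation times quoted above (seconds per 512x512 image).
SECONDS_PER_IMAGE = {
    "RTX 3060 12GB": 10.0,
    "RTX 3090 Ti": 4.0,
}


def images_per_hour(seconds_per_image: float) -> float:
    """Convert a per-image time into an idealized hourly throughput."""
    return 3600.0 / seconds_per_image


def speedup(slow_seconds: float, fast_seconds: float) -> float:
    """How many times faster the quicker GPU is than the slower one."""
    return slow_seconds / fast_seconds


if __name__ == "__main__":
    for gpu, secs in SECONDS_PER_IMAGE.items():
        print(f"{gpu}: about {images_per_hour(secs):.0f} images per hour")
    ratio = speedup(
        SECONDS_PER_IMAGE["RTX 3060 12GB"], SECONDS_PER_IMAGE["RTX 3090 Ti"]
    )
    print(f"The 3090 Ti is roughly {ratio:.1f}x faster per image")
```

Under those idealized assumptions, the 3060 works out to about 360 images per hour and the 3090 Ti to about 900, a 2.5x difference.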
